The convergence of LiDAR (Light Detection and Ranging) with augmented reality (AR), virtual reality (VR), and mixed reality (MR) is accelerating the evolution of immersive systems. Previously hindered by high costs and large form factors, LiDAR sensors have undergone rapid miniaturization and cost optimization. Modern consumer devices now integrate compact LiDAR units capable of generating real-time, high-resolution spatial data essential for immersive computing.

LiDAR Fundamentals: Optical Sensing through Time-of-Flight Measurement

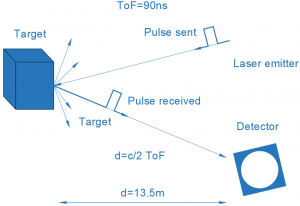

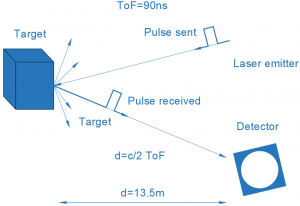

LiDAR systems operate by emitting coherent laser pulses and measuring the time delay between pulse emission and return after surface reflection. This time-of-flight (ToF) technique enables accurate 3D spatial mapping with centimeter- to sub-centimeter-level precision.
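The time-of-flight relationship can be written as d = c · t / 2, where t is the measured round-trip delay. The following is a minimal sketch of that arithmetic, not any vendor's implementation; the function names are hypothetical and chosen for illustration only.

```python
# Illustrative sketch: function names are hypothetical, not from any LiDAR SDK.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by SI definition (vacuum)


def tof_distance_m(round_trip_time_s: float) -> float:
    """Return the one-way distance for a measured round-trip delay."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the surface and back, hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def timing_resolution_s(depth_resolution_m: float) -> float:
    """Return the round-trip timing resolution needed for a depth resolution."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    print(f"{tof_distance_m(20e-9):.3f} m")              # 2.998 m
    print(f"{timing_resolution_s(0.01) * 1e12:.1f} ps")  # 66.7 ps
```

The second figure shows why centimeter-level depth resolution is demanding: it corresponds to resolving round-trip delays of tens of picoseconds.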

Using wavelengths from roughly 250 nm (ultraviolet) to 10 µm (infrared), depending on environmental and application requirements, LiDAR constructs dense point clouds that represent the spatial geometry of objects and surfaces. These point clouds contain not only positional data but also intensity returns, which indicate surface reflectivity and can offer hints about material composition.
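As a rough illustration of how a single return becomes a point-cloud entry, the sketch below converts a range and two beam angles into Cartesian coordinates and keeps the intensity alongside. The `LidarPoint` type, the `to_point` function, and the axis convention (x forward, y left, z up) are assumptions made for this example; real sensors and SDKs define their own frames and data layouts.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LidarPoint:
    """Hypothetical point-cloud entry: position in meters plus return intensity."""
    x: float
    y: float
    z: float
    intensity: float


def to_point(range_m: float, azimuth_rad: float,
             elevation_rad: float, intensity: float) -> LidarPoint:
    """Convert one range/angle measurement to a Cartesian point.

    Assumed convention: x forward, y left, z up; azimuth is measured in the
    x-y plane from the x axis, elevation from the x-y plane toward z.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return LidarPoint(
        x=horizontal * math.cos(azimuth_rad),
        y=horizontal * math.sin(azimuth_rad),
        z=range_m * math.sin(elevation_rad),
        intensity=intensity,
    )


if __name__ == "__main__":
    # A return 2 m straight ahead with a normalized intensity of 0.5.
    print(to_point(2.0, 0.0, 0.0, 0.5))
```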

Originally deployed in meteorological and geospatial research, including the laser altimeter carried on the 1971 Apollo 15 mission to map lunar topography, LiDAR has now found broad commercial application. Its integration in navigation systems, smart infrastructure, autonomous vehicles, and now AR/VR/MR platforms highlights its growing importance across technical domains.

LiDAR for AR/VR relies on time-of-flight technology to map the shape and contours of objects or environments.

AR/VR/MR Enhancement Through LiDAR Integration

Legacy AR and VR systems relied heavily on monocular or stereo machine vision for environmental sensing. These methods often struggled with dynamic lighting, surface textures, and occlusion challenges. LiDAR addresses these limitations by offering:

- High-fidelity spatial tracking that is largely independent of ambient lighting

- Real-time scene reconstruction for SLAM (Simultaneous Localization and Mapping)

- Precision object anchoring and occlusion handling

- Dynamic interaction support for multi-user or multi-object environments

The introduction of compact LiDAR modules in mainstream consumer electronics marked a turning point in spatial computing, enabling software developers to create robust, low-latency AR/VR applications with advanced spatial awareness.

Benefits of LiDAR in AR/VR/MR Systems

- Sub-Centimeter Depth Accuracy: Enables realistic occlusion and physical object interaction.

- Scene-Aware Rendering: Allows light-aware rendering and environment mapping in real time.

- Rapid Environment Calibration: Reduces latency in initializing 3D spatial models.

- Robust SLAM Support: Facilitates precise mapping and localization for mobile XR systems.
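Depth-based occlusion, mentioned in the lists above, usually comes down to a per-pixel comparison: a virtual pixel is hidden when the sensed real surface is closer to the camera than the virtual object. The sketch below shows that comparison on plain nested lists. The function name, the tolerance value, and the convention that a non-positive depth means "no return" are all assumptions for illustration; production renderers typically do this on the GPU.

```python
from typing import List


def occlusion_mask(real_depth_m: List[List[float]],
                   virtual_depth_m: List[List[float]],
                   tolerance_m: float = 0.01) -> List[List[bool]]:
    """Return True where a virtual pixel is hidden behind a real surface.

    Assumptions (hypothetical, for illustration):
    - both depth maps have the same shape and are in meters;
    - a real depth <= 0 means the sensor got no return, so nothing occludes.
    """
    mask = []
    for real_row, virtual_row in zip(real_depth_m, virtual_depth_m):
        mask.append([
            real > 0 and real < virtual - tolerance_m
            for real, virtual in zip(real_row, virtual_row)
        ])
    return mask


if __name__ == "__main__":
    real = [[1.0, 3.0], [0.0, 2.0]]      # sensed depth per pixel
    virtual = [[2.0, 2.0], [2.0, 2.0]]   # virtual object placed 2 m away
    print(occlusion_mask(real, virtual))
```

The tolerance keeps surfaces at nearly the same depth from flickering between hidden and visible when the depth measurement is noisy.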

Key LiDAR Specifications for Immersive System Design

To ensure high performance in AR/VR/MR systems, attention must be paid to several LiDAR system parameters:

1. Detection Range

- Defines the maximum distance at which an object can be sensed.

- Influenced by laser power, pulse repetition frequency, receiver aperture diameter, target reflectivity, and environmental conditions.

- Consumer LiDAR typically supports ranges up to 5 meters; extended-range LiDAR (for automotive or industrial use) may exceed 100 meters.
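One way the pulse rate bounds range in a simple pulsed system: if each pulse must return before the next one is emitted, the maximum unambiguous range is c / (2 · f), where f is the pulse repetition frequency. The sketch below computes only that single limit, under that assumption; practical range also depends on the other factors listed above, and the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by SI definition (vacuum)


def max_unambiguous_range_m(pulse_repetition_hz: float) -> float:
    """Return the farthest range whose echo arrives before the next pulse.

    Assumes a simple pulsed system with one pulse in flight at a time.
    """
    if pulse_repetition_hz <= 0:
        raise ValueError("pulse repetition frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * pulse_repetition_hz)


if __name__ == "__main__":
    for rate_hz in (1e5, 1e6, 1e7):
        print(f"{rate_hz:>12,.0f} Hz -> {max_unambiguous_range_m(rate_hz):8.1f} m")
```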

2. Range Precision

- Refers to the consistency of distance measurements across repeated scans.

- High precision minimizes frame-to-frame jitter in object localization.

3. Range Accuracy

- Measures the deviation between the actual object distance and the LiDAR estimate.

- Crucial for applications requiring precise environmental overlays or physical interaction.
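The distinction between precision and accuracy is easy to see with repeated measurements of a target at a known distance. In the sketch below, accuracy is summarized as the mean signed error (bias) and precision as the sample standard deviation; these are common choices rather than the only definitions. The readings are invented sample data and the function names are hypothetical.

```python
import statistics
from typing import Sequence


def range_accuracy_m(measurements_m: Sequence[float],
                     true_distance_m: float) -> float:
    """Mean signed error: how far the average reading is from the truth."""
    return statistics.fmean(measurements_m) - true_distance_m


def range_precision_m(measurements_m: Sequence[float]) -> float:
    """Sample standard deviation: how much repeated readings scatter."""
    return statistics.stdev(measurements_m)


if __name__ == "__main__":
    # Invented readings of a target placed exactly 2.000 m away.
    readings = [2.012, 2.009, 2.011, 2.010, 2.008]
    print(f"bias:   {range_accuracy_m(readings, 2.000) * 1000:.1f} mm")
    print(f"spread: {range_precision_m(readings) * 1000:.1f} mm")
```

In this made-up data set the readings cluster tightly (good precision) but sit about a centimeter too long (a systematic accuracy error), which is the kind of offset calibration is meant to remove.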

4. Field of View (FOV)

- Denotes the angular coverage of the sensor’s scanning field.

- Compact LiDAR units for AR headsets or mobile devices may operate with 70°–120° FOV, while full-environment mapping systems may employ 360° scanning mechanisms.
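For a sense of scale, the width covered by a given FOV at distance d on a flat surface facing the sensor is 2 · d · tan(FOV / 2). A minimal sketch of that geometry follows, assuming an ideal symmetric field of view; the function name is hypothetical.

```python
import math


def coverage_width_m(distance_m: float, fov_deg: float) -> float:
    """Width covered on a flat, sensor-facing surface at a given distance.

    Assumes an ideal, symmetric field of view narrower than 180 degrees.
    """
    if not 0.0 < fov_deg < 180.0:
        raise ValueError("fov_deg must be between 0 and 180 (exclusive)")
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    for fov in (70.0, 90.0, 120.0):
        print(f"{fov:5.0f} deg at 3 m -> {coverage_width_m(3.0, fov):.2f} m wide")
```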

5. Scan Pattern and Resolution

- Describes the method and density of spatial sampling.

- Includes scan line count, angular resolution, and refresh rate, all of which directly affect spatial granularity and system responsiveness.
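These parameters trade off against each other, and a quick estimate helps when budgeting bandwidth and processing. The sketch below assumes a simple raster-style scan with uniform angular steps, which not every sensor uses; the function names and the example figures are hypothetical.

```python
import math


def points_per_second(scan_lines: int, horizontal_fov_deg: float,
                      angular_resolution_deg: float,
                      refresh_rate_hz: float) -> int:
    """Estimate point throughput for a uniform raster-style scan (assumed)."""
    samples_per_line = round(horizontal_fov_deg / angular_resolution_deg)
    return int(scan_lines * samples_per_line * refresh_rate_hz)


def point_spacing_m(distance_m: float, angular_resolution_deg: float) -> float:
    """Approximate gap between adjacent samples on a sensor-facing surface."""
    return distance_m * math.tan(math.radians(angular_resolution_deg))


if __name__ == "__main__":
    # Hypothetical sensor: 64 lines, 90 degree FOV, 0.5 degree steps, 30 Hz.
    print(points_per_second(64, 90.0, 0.5, 30.0), "points/s")
    print(f"{point_spacing_m(3.0, 0.5) * 100:.1f} cm between samples at 3 m")
```

Halving the angular step doubles the samples per line, so finer spatial detail has to be paid for with either a lower refresh rate or a higher data rate.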

6. Wavelength and Optical Safety

- Laser wavelengths in the 905 nm and 1550 nm bands balance performance, eye safety, and component cost.

- NIR wavelengths provide strong performance in variable ambient lighting conditions.

How Optical Manufacturers Support AR/VR/MR Engineers

Optical system manufacturers are essential partners in the development of advanced LiDAR-enabled AR/VR/MR solutions. Their contributions span the full engineering lifecycle, including:

1. Custom Optics and Lens Design

- Engineering of aspheric, freeform, or diffractive lenses optimized for compact, high-performance LiDAR modules.

- Design of lens assemblies that minimize chromatic aberration, maintain beam collimation, and manage thermal shifts.

2. Optical Coatings and Filters

- Application of anti-reflective, dielectric, or bandpass coatings to improve SNR and reduce power loss.

- Fabrication of NIR bandpass filters to isolate laser return signals from ambient IR noise.

3. Miniaturization for Embedded Systems

- Development of sub-millimeter precision optical assemblies tailored for compact XR form factors such as smart glasses, AR headsets, and mobile devices.

- Integration of optics with MEMS mirrors and other beam-steering components, VCSEL emitters, and SPAD detector arrays.

4. Optical Simulation and Tolerance Analysis

- Use of optical design software (e.g., Zemax, CODE V, LightTools) to validate system tolerances, ensure manufacturability, and assess beam divergence.

5. Prototyping and Scaling to Production

- Rapid prototyping for concept validation.

- Scalable production infrastructure for transitioning from R&D to commercial deployment.

By engaging with specialized optics manufacturers early in the product design phase, AR/VR/MR engineers can de-risk development, optimize for performance and size constraints, and accelerate time-to-market.

Precision Optics for Immersive Technologies at Shanghai Optics

Shanghai Optics is a leading provider of custom high-precision optics and integrated optical systems for AR/VR/MR and LiDAR applications. With decades of experience in beam-shaping optics, IR-compatible coatings, and optomechanical assembly, Shanghai Optics delivers mission-critical components tailored to stringent performance requirements.

Expertise Includes:

- LiDAR beam-shaping and collimation optics

- Compact optical subsystems for mobile and wearable XR platforms

- NIR filter design and laser window fabrication

- Co-development of optical modules for structured light and ToF applications

Contact Shanghai Optics: Your Optics Partner for XR Innovation

Looking to advance AR/VR/MR system performance through LiDAR integration? The Shanghai Optics engineering team is ready to support your next-generation design, from early feasibility assessments through full custom production.